Notes on Search and AI

More questions than answers...

What to say about the future of AI and search?

Obviously, we don’t know. But I’m going to jot down a few thoughts.

🤖🤖🤖

Clicks to Websites will go up/down/sideways?

There’s an interesting spectrum of responses to the new “generative search experiences” and what it means for clicks out to websites.

The super optimistic outlook says:

“Generative search & AI is going to absolutely explode the number of search queries per user per day. Just like mobile search and YouTube search are largely additive to “regular” search, we’re going to see more search queries than ever and eventually more outbound clicks than ever before.”

The pragmatic positive outlook says:

“Look, Google’s ads business model relies on showing you links and driving clicks to websites. So however the future interface changes there’s going to be a fundamental notion of links and clicks that will continue to exist. So there will be changes big and small to the number of clicks but ultimately it’s not going to be a “50% decline in all search” scenario”

The pragmatic pessimist outlook says:

“Sure, search click curves are going to change - but actually for A LOT of queries you simply don’t need to click out - the query is well served by an LLM. While many transactional queries will survive, the informational and exploratory queries will be completely re-shaped. This will lead to broad-scale changes across the media landscape in particular.”

The super pessimistic outlook says:

“LLMs are going to fundamentally rewrite how people interact with the web to the point that search itself diminishes as a behavior - leading not only to dramatically fewer clicks to websites but even Google’s core ads business model will come under threat.”

Whichever outlook you have, I think one thing is becoming obvious - given how fast open source and small local models are improving, I don’t think search is going to be threatened by a membership model (a la $20 / month for ChatGPT). I think search is going to remain free and ad supported for some time to come.1

🤖🤖🤖

Just-in-time Interfaces

There’s another outlook though - maybe the era of search-first is just over? What I mean is this - you could argue that today we start our queries in search (just like we used to start our search in directories many moons ago). Whether it’s voice search, Google search etc we start there.

But you could argue that we’re entering an era where we start our queries with an LLM/assistant interface. Something that results in search some of the time - but NOT all the time. Sometimes it results in novel interfaces - whether it’s a just-in-time UI that is assembled to support your task completion or whether it’s a collaborative document / code environment.

I’ve been playing around with a workflow that goes something like:

- Tell GPT what I want to do

- Ask GPT to think about what interface would enable that task completion

- Ask GPT to give me the code for that interface

- Copy and paste that code into Replit

This is all quite manual and a little bit slow today - but when it gets it right, the results are magical and truly novel. I could easily imagine a new OpenAI beta playground environment that tried to build interfaces on the fly for you. It’s all there today.
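The manual steps above can be sketched as a small prompt chain. This is only a rough illustration: `ask` is a hypothetical stand-in for whatever function sends a prompt to a model and returns its text reply, and the prompt wording is made up for the example. The last step (pasting into Replit) stays manual.

```python
from typing import Callable


def build_interface_code(task: str, ask: Callable[[str], str]) -> str:
    """Chain three prompts: task -> interface idea -> code for that interface.

    `ask` is a hypothetical callable (prompt in, model reply out); swap in
    whatever LLM client you actually use.
    """
    # Step 1: tell the model what we want to do.
    goal = ask(f"I want to do the following task: {task}. Restate the goal briefly.")

    # Step 2: ask what interface would enable that task.
    interface = ask(
        f"Goal: {goal}\nDescribe a simple web interface that would help me complete it."
    )

    # Step 3: ask for the code for that interface.
    code = ask(
        f"Interface description: {interface}\n"
        "Write a single self-contained HTML file that implements it."
    )

    # Step 4 (still manual): paste the returned code into Replit.
    return code


if __name__ == "__main__":
    # A fake model so the sketch runs offline; it only reports the prompt size.
    def fake_ask(prompt: str) -> str:
        return f"<reply to {len(prompt)} chars>"

    print(build_interface_code("plan a week of dinners", fake_ask))
```

Passing the model call in as a function keeps the chain independent of any particular vendor, which also makes it easy to try the same three prompts against a small local model.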

See: the tldraw experiment that lets you draw a UI and have GPT build a working version.

(Aside: Google search results are essentially adaptive UI designed for the query at this point! I mean look at the custom ecommerce UI all over a standard shopping query these days.)

🤖🤖🤖

What new kinds of queries will exist?

Ok, so if we have a different kind of box to type or talk into - how will this differ from a search box? Well, I think the affordances of natural language will change the kinds of behaviors that we find ourselves familiar with.

Here’s a simple example. People used to search for things with specific location modifiers - think “restaurants in NYC” but as it became obvious and predictable that Google already knows where the query is coming from, we stopped searching for “restaurants in NYC” and started searching for “restaurants near me”.

The “near me” version of queries became the dominant version about 10 years ago.

So the affordances of the search results change our behavior.

It’s fun to imagine whole new kinds of search queries / questions that you simply wouldn’t type into Google because it wouldn’t make sense. Things like:

- Why am I feeling anxious today?

- What should I work on today?

- Should I take the job offer I was just sent?

- Can I afford a holiday this year?

- Give me a poem to start my day

I’m betting that as we get comfortable talking to LLMs we’ll slowly get comfortable asking more personal, more spiritual, more emotional, more goal-oriented questions and discussions.

(Obviously, I’m guessing the real queries of the future will look nothing like this - it’ll be some other weird second order effect that no one anticipated)

🤖🤖🤖

We’re going to need new ideas for measurement

We’re going to need new measurement ideas for two things: search quality and search rankings.

Google hasn’t had any serious competition for decades - and so collectively I think we’ve all kind of given up on measuring search quality? Even Google I think has become lazy in how they measure search quality in recent years.

But it’s suddenly a serious question to ask who did it better for a particular category of search.

(In 10 minutes I built a little side by side for Google and Perplexity. Play around here: https://llm-search-comparison.replit.app/)
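One crude signal you could compute in a side by side like this is how much two engines agree on sources. The sketch below is not how the comparison tool above works; it is a minimal illustration that assumes you already have each engine's cited URLs as a list, and the function names and example URLs are invented. Overlap is not quality, but a very low score for a category of queries is a hint that one of the two is looking somewhere the other is not.

```python
from urllib.parse import urlparse


def domains(urls):
    """Return the set of hostnames in a list of result URLs, minus a leading 'www.'."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname or ""
        if host.startswith("www."):
            host = host[4:]
        if host:
            hosts.add(host)
    return hosts


def domain_overlap(results_a, results_b):
    """Jaccard overlap of the domains cited by two engines (0.0 to 1.0)."""
    a, b = domains(results_a), domains(results_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    # Made-up result lists for illustration only.
    engine_a = ["https://www.example.com/a", "https://example.org/b"]
    engine_b = ["https://example.com/c", "https://example.net/d"]
    print(round(domain_overlap(engine_a, engine_b), 2))
```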

Of course - it’s not quite true that no one is measuring search quality. Daniel S. Griffin has a really interesting collection of articles, academic papers and resources around “search audits”. Ok, so I guess we all have some homework to do.

But/and - because the affordances are changing and search behavior is changing we’re still going to need some careful thinking around how we measure the quality of search results.

Meanwhile, in the SEO industry, we’ve been relying on “rank tracking” for a long time (too long?). I’m excited that this new era of generative search experiences might usher in some innovation from the SEO tool providers around how we measure performance in search. It’s needed.

🤖🤖🤖

Actually, there’s a third thing we’re going to need to invent new measurement standards for: clicks. AI-assisted clicks. Do they show in analytics? Do bots declare themselves? When a bot browses on your behalf what user-agent does it use? Are bots allowed to fetch URLs on your behalf if they do it locally? Or only via direct user-initiated command?
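For the bots that do declare themselves, a first pass at spotting AI-assisted visits is to look for known tokens in the user-agent header. The sketch below is a minimal illustration under loud assumptions: the token list is a small, incomplete sample (check each vendor's documentation for current values), the labels are invented for this example, and anything that does not declare itself simply comes back as "unknown".

```python
# Illustrative, incomplete mapping of user-agent substrings to a label.
# The labels are an assumption made for this sketch, not a standard.
AI_AGENT_TOKENS = {
    "GPTBot": "ai-crawler",
    "ChatGPT-User": "ai-user-initiated",
    "PerplexityBot": "ai-crawler",
}


def classify_user_agent(user_agent: str) -> str:
    """Return a label for a user-agent string, or 'unknown' if no token matches."""
    ua = user_agent.lower()
    for token, label in AI_AGENT_TOKENS.items():
        if token.lower() in ua:
            return label
    # Bots that do not declare themselves look like ordinary browsers here.
    return "unknown"


if __name__ == "__main__":
    sample = "Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"
    print(classify_user_agent(sample))
```

This only answers the "do bots declare themselves?" question for the ones that choose to; an agent fetching pages locally with a regular browser user-agent would be invisible to this kind of check.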

🤖🤖🤖

The Skill of Searching

Here’s another way of thinking about the impact of LLMs.

You know how some people are just better at searching than others? If you’re reading this article you’re probably thinking that you’re good at searching. Maybe your significant other turns to you to help them find the right thing, maybe you feel like you’re competent at weeding through 10 blue links to find the most relevant result. You’re fluent and competent at the internet.

Being good at searching is a combination of query formulation, looking for signs, recognizing trusted sites etc.

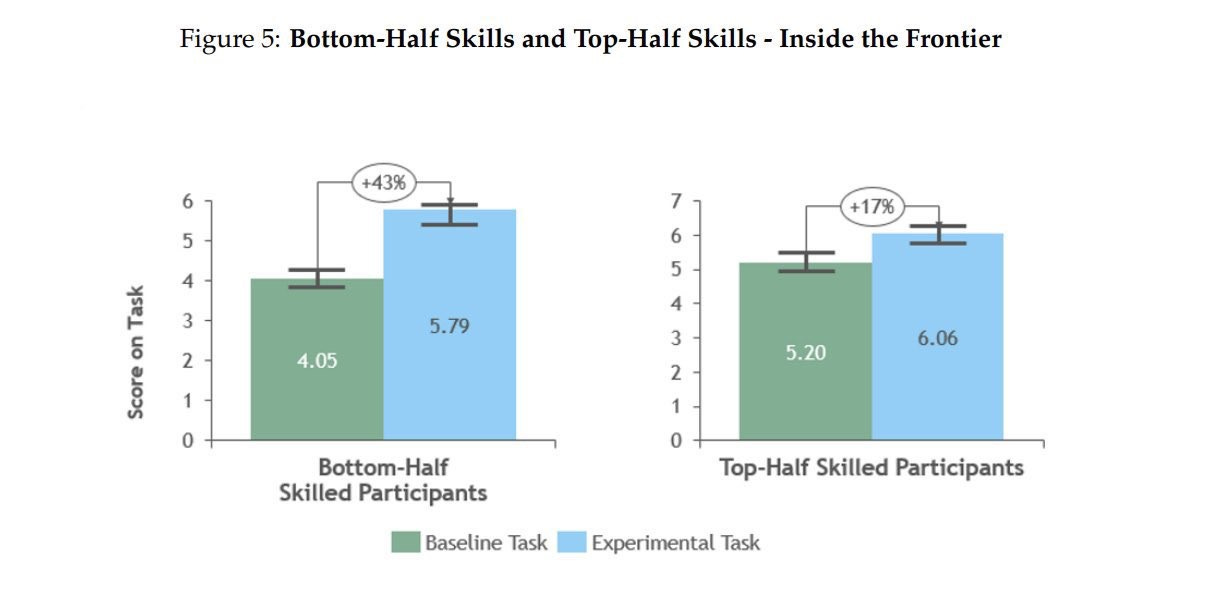

Well, studies find that for knowledge worker tasks, LLMs lift the below average people the most and close the gap between top and bottom performers:

Across a set of 18 tasks designed to test a range of business skills - from analysis to idea generation to persuasion - consultants who had previously tested in the lower half of the group increased the quality of their outputs by 43% with AI help while the top half only gained 17%. Where previously the gap between the average performances of top and bottom performers was 22%, it shrunk to a mere 4% once the consultants used GPT-4.

So, maybe our model for how search will evolve is instead of imagining your average person searching for a generic query, imagine them being as good as you are at searching. How would that change your ideas for the future of search behavior?

After all - maybe you’re the obsessive nerd who opens 15 tabs for every search. Well, everyone can do that now.

🤖🤖🤖

Context & Persistence

It’s clear to me that two missing “obvious” ideas from the LLM chat interfaces we have today are context and persistence.

Context - because although you can write your own custom GPTs and so on, today even Bard doesn’t have access to your calendar, your email and your chats. Surely the eventual assistant will have much more awareness of who you are, what you’re working on, where you are etc. This is part of the bull case for Apple/Google dominance in the AI era.

Persistence - why can’t I ask GPT to remind me next month about something? Or to notify me when some future thing happens? This feels like Google Alerts (or Yahoo Pipes) on steroids and it’s simply a quirk of the technology today that the chat interfaces don’t do this. I think before the year is out GPT will have a way of sending you alerts, notifications and reminders.

My friends at Addition are building a kind of LLM-powered Google Alerts here in early beta: Insightly

🤖🤖🤖

Of course - the future will be agents, doing tasks with you and for you. Acting on your behalf. I think we’re a little ways off from that happening for anything beyond basic tasks today - but it’ll come, and when it does we’ll need new paradigms for all kinds of things.

🤖🤖🤖

Publishers & Poisoned Wells

Do you remember the poisoned well argument around Google AMP? Basically publishers hated Google AMP because it broke the rules of the web - Google acting like a hosted intermediary between readers and publishers. But of course Google argued that if publishers didn’t have such shitty websites covered in ads and bloat and crap, AMP wouldn’t be needed.

Well.

I think we’re going to see a very similar story play out with Google vs publishers. The publishers right now are up in arms that Google could use LLMs to answer queries, thus reducing clicks to the “open web”. And yet - in conversations I’m having with publishers and brands behind the scenes, companies are desperate for traffic and many are planning for large-scale deployment of AI-written content.

Honestly - I’m not sure this is a bad thing! There’s a lot of very terrible human-written content out there.

But of course this is going to trigger a bit of an arms race where Google points to all the AI-generated bloat and spam from publishers as an excuse for introducing their AI experiences.

🤖🤖🤖

Here’s one idea - everyone is focused on generating indexable content for Google to consume and rank.

Maybe the future is indexable experiences.

No, I don’t care to explain myself.

Ok alright, well I think most content that brands create should actually be an experience. A little web-app that helps you. For example for a query like “how to get slime out of carpet”2 - I don’t want an article with 1000 words of SEO-focused garbage with the history of slime, headers answering all the “FAQs” like “what is slime”.

What I want is a little slime helper that says “what kind of slime is it? how long has it been there? do you have vinegar?” then gives me some help to guide me through.

I mean, what I really want is to yell at my kids. Maybe an LLM can help me parent better in the future. Maybe an LLM can stop my kids putting slime on the carpet in the future. See above: new kinds of queries.

No, I don’t know how “indexable experiences” will work. Do you?

🤖🤖🤖

The only certain thing is uncertainty

Here’s a nice analogy from Sean Blanda around how the fundamental predictability of platforms has changed:

Much like stable national business and predictable tax policy attract investment, stable internet platforms attract makers and creators. I'm more willing to build my audience on your service if I can expect a reasonable amount of stability and predictability. For more than a decade, Google, Twitter, YouTube, and LinkedIn had stable rules and incentives.

However, thanks to a mix of AI and changing market conditions, each of these platforms is now antagonistic to linking to outside sources. Their reliance on humans to create chum for the algorithm may be temporary as AI and machine-generated content are now easier to create.

As a creator, journalist, or brand, you can no longer reliably pour money or labor into a platform and have it reliably return an audience that you can turn into customers. As a result, audience growth has slowed across the board.

I’ve seen personally how much this lack of predictability has paralyzed client budgets. Most companies are not very good at operating in uncertainty. (I’m guessing Vaughn Tan is very much in demand right now).

🤖🤖🤖

Some default recommendations that I’m using with clients I work with:

- Put AI in the loop for content creation today, but be very careful about creating AI content without humans in the loop.

- I’m more interested in AI-generated experiences than I am in AI-generated content. AI-generated experiences can start very simply but are surprisingly scalable. Remember that intent / information gain / task completion is always the goal.

- Developing AI capabilities is more important than knowing exactly where it shows up or how you’ll use it. This typically means starting with a small pilot, and carefully talking about it and sharing it internally.

- CRM is a huge opportunity for AI (though I suppose CRM was always a huge opportunity - but it was neglected even before AI).

- Now is a great time to carefully consider the question of “quality content” and “quality experiences” - for a long time companies operated without a strong definition or measurement of quality. “Humans made it” was a proxy for “it’s good” but now we need new benchmarks and metrics for what “good” means. We need that before we can start using AI.

- Yes, once you have a clear understanding of “quality” an LLM can be very useful in quality control.

🤖🤖🤖

We invented computers and they changed everything. And then one day we discovered they can talk…

🤖🤖🤖

Beep boop. What are you thinking about?

Update from a cold room later that same day, Jan 31st: Jared Hecht has a nice little riff on agent native applications that provokes the question of whether web3 enters the chat. This piece helped me think a bit more deeply about the role of aggregators, data layers and protocols as it relates to search.

Here’s an interesting perspective. For a keyword like “best laptop”, Google could already remove the middle-man of publishers regurgitating 4,000 comparison pieces. But they choose not to. For how long? And in an agent-first world we might need more headless brands where attribution and kickbacks and royalties are paid out to various players along the value chain…

Update #2: Feb 5th: I saw this excellent demo of just-in-time interfaces assembled using AI - starts around 3min in.

1. Which means that one of the most interesting areas of research right now is how you put ads inside an LLM! The greatest minds of our generation etc etc. But this is a trillion dollar problem and it doesn’t seem like there’s much progress yet?

2. Yes, an actual search query from 1am last night. Thanks kids.


This post was written by Tom Critchlow - blogger and independent consultant. Subscribe to join my occasional newsletter: