How do you measure good content?

In search of a framework or process to judge editorial... quality?

As a consultant working with content companies, I often find myself bridging editorial and business. That squishy area between editorial values and business metrics. There’s a simple question that quickly arises:

How do you measure content quality?

I think we can all agree that neither page views nor social media shares are the way to measure content. Equally, you can’t just rely on the intuition of the editor in chief… So what metrics do you use to evaluate content?

This question of content quality is an important one. Whether you’re a media company producing content or a brand using content as marketing, you need some way of knowing whether the content you’re producing is “good”.

I’m not looking to reduce everything to numbers here. I’m just looking for some useful frameworks or mental models to guide a team that is producing content to produce better content. Especially as a consultant coming in to help evaluate an organization and push them forward.

(And no, this isn’t a conversation about happy-clappy Medium.)

The people I’ve talked to seem to have a pretty strong idea of what good content is. And pretty much everyone (myself included) thinks a strong “editor in chief” role can really help steer editorial quality.

But there’s very little I’ve found about how you actually manage content production and how you might evaluate content quality. So… this is a request? Please send me whatever you’ve got!

Below are some ideas and conversation starters for what I think is an important idea for anyone in the “content business”.

Defining an editorial mission is a good start…

In my presentation The State of Content from earlier this year, I made the link between defining an editorial mission and hiring a strong editor in chief to champion and bring that to life. I included this quote from the NYT 2020 report:

Our most successful forays into digital journalism […] have depended on distinct visions established by their leaders — visions supported and shaped by the masthead, and enthusiastically shared by the members of the department. […] These departments with clear, widely understood missions remain unusual. Most Times journalists cannot describe the vision or mission of their desks.

I love this, and I still think this is one of the best ways to produce strong content that connects with an audience.

But… I’m left feeling slightly unsatisfied. And, in particular, as a consultant I’m often in the weeds with a client trying to help them define an editorial mission and build processes and teams that can stick to it.

And… I don’t have a good framework to turn to that helps a team to keep on track? Do you have one?

Defining an audience and understanding their needs is also good…

I’ve seen several examples of teams that tried to define an editorial mission without properly defining an audience (or audiences). And every time they came up short. Also from my State of Content presentation, I love this quote (actually a Twitter thread) from Kyle Monson:

Whether your employer is a publisher, a brand, or an agency. If you’re making garbage, help them do better. The good ones will listen to you and incorporate your expertise. In my experience, most of them really do want to do it right.

And believe it or not, super engaged communities often want to hear from the brands who serve them. And super engaged brands want to provide communities with things other than just “content”.

So if you find yourself writing pablum, narrow the audience to a core group of smart people who care about what the brand has to say. And then convince the brand to say something meaningful to that small, smart audience. Godspeed.

Of course, don’t get me wrong… this is hard! Especially the part about narrowing your focus. And while defining an audience helps shape your overall editorial strategy, it doesn’t help you judge individual pieces.

Perhaps interestingness as a measure can guide us?

When I put out a call on Twitter, my friend Toby Shorin linked me to the Ribbonfarm piece “books are fake”, and this quote in particular:

Interestingness is not a fixed property, but a move in a conversation between what everyone in the audience already knows (the “assumption ground”) and the surprise reveal. Being interesting means that the audience shares, or can be made to share, the common knowledge that the author seeks to undermine. Interestingness is a function of whatever body of knowledge is already assumed to be true. Therefore, it can be difficult to see the interestingness – the point – of a fragment of an alien conversation.

Oh! This sounds useful - can we extend this into a measure of interestingness? Perhaps by shining a light on and agreeing upon a definition or outline of the assumption ground?

I think there’s a thread to be explored here. My friend Sean Blanda said:

I like this. And I don’t know of many organizations or individuals that care about “advancing the conversation” so I think there’s definitely something useful here. But it’s still a long way from being a real framework or process you can follow with any rigor… In a chat conversation between me, Sean and Brian Dell we discussed whether it’s possible to add any rigor here. I love this from Brian:

Ok now we’re getting somewhere! But, it’s that soft space. How do you poke it? How do you make it less soft? I asked Jim Babb and he said:

I’m not sure there are clear answers here. Seems that a lot of people are defining, measuring and thinking about this in lots of different ways.

Surveys & Feedback

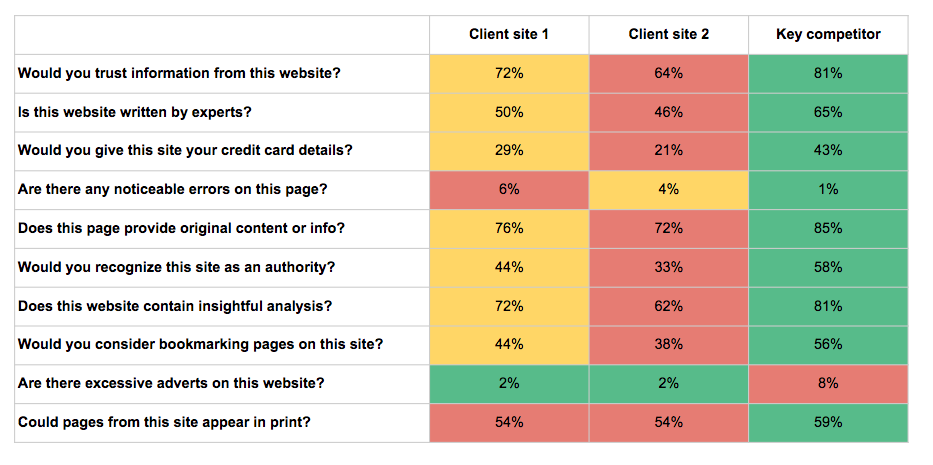

One tool in my arsenal that I’m relying on is a variation of the Google Panda Questionnaire. In short, it’s a 10-question survey that you can run with real humans on any web page to get a measure of “quality”.

It’s useful for a few key reasons:

- The questions came originally from Google’s quality rater guidelines doc so the framework has some relevance. Companies understand why you want to use it as a benchmark.

- It quantifies otherwise subjective measures like how much users trust the website.

- It’s generic and you can use it for any site.

The results from this kind of survey can be very powerful for company execs. This is an example from a client:

The Panda survey is a useful tool¹ but it’s geared towards evaluating site design and UX, though it does include aspects of content too.

Maybe there’s a version of the Panda survey for evaluating content interestingness?

What would a version of the Panda survey look like if it were specifically about judging editorial content? Could we construct an Editorial Value Survey (EVS for short) built from questions like these:

- Did you find value in reading this content?

- Does the opening paragraph make you want to keep reading?

- Would you have finished reading this piece of content if you were reading by yourself?

- Would you bookmark this content to come back to later?

- Would you share this piece of content with a friend or colleague?

- Would you share this piece of content on social media?

- Would you describe this content as [insert question relating to our editorial mission, see above]?

- Would this piece of content make you want to learn more about who wrote it?
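If you ran a survey like this, tallying the results could be very simple. Here’s a minimal sketch in Python, assuming every respondent answers each question with a plain yes or no. The question keys and the `evs_scores` function are hypothetical names I’ve made up for illustration, not part of any existing tool, and the sample answers are invented.

```python
# Hypothetical short keys standing in for a few of the EVS questions above.
QUESTIONS = [
    "found_value",
    "opening_hooks",
    "would_finish",
    "would_bookmark",
    "would_share_friend",
]


def evs_scores(responses):
    """Return (share of 'yes' per question, overall average share).

    `responses` is a list of dicts mapping a question key to True (yes)
    or False (no). A missing answer is counted as a 'no' (an assumption).
    """
    if not responses:
        raise ValueError("need at least one survey response")

    per_question = {}
    for question in QUESTIONS:
        yes_count = sum(1 for r in responses if r.get(question, False))
        per_question[question] = yes_count / len(responses)

    overall = sum(per_question.values()) / len(QUESTIONS)
    return per_question, overall


# Invented answers from two readers of the same piece.
sample_responses = [
    {q: True for q in QUESTIONS},
    {"found_value": True, "opening_hooks": False, "would_finish": False,
     "would_bookmark": False, "would_share_friend": False},
]

per_question, overall = evs_scores(sample_responses)
print(per_question)
print(round(overall, 2))
```

The per-question shares are probably more useful than the single overall number, since they show where a piece falls down (say, a weak opening) rather than just that it did.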

Or, perhaps we need more of an internal process or framework?

My solution here doesn’t necessarily need to be a user survey. It could just be an internal process of getting a group of employees and/or writers together and creating a scoring methodology for content. I had one client who could never define good content, so instead created a benchmark for good enough content. Defining the worst possible thing they could still publish actually gave them a clearer focus for evaluating content.

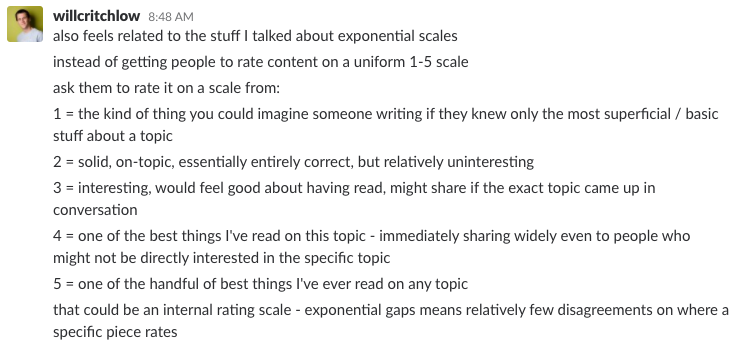

An internal framework could be a short list of questions to interrogate our content, with a small internal team rating each piece on a scale. My brother Will suggested that an exponential scale can sometimes be useful for these internal ratings to better catch outliers:

This exponential scale might be useful. But perhaps all that’s needed is a simple checklist? Can we distill our editorial strategy into a set of checklist items to ensure that content aligns with our goals?
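To see why an exponential scale might catch outliers better than a linear one, here’s a rough sketch in Python. The 1, 2, 4, 8, 16 point values are my own assumption for illustration, not necessarily the scale Will had in mind, and the ratings are made up.

```python
# Hypothetical mapping from a 1-5 rating to exponential points (an assumption).
EXPONENTIAL_POINTS = {1: 1, 2: 2, 3: 4, 4: 8, 5: 16}


def linear_average(ratings):
    """Plain average of the 1-5 ratings."""
    return sum(ratings) / len(ratings)


def exponential_average(ratings):
    """Average after converting each rating to its exponential points."""
    return sum(EXPONENTIAL_POINTS[r] for r in ratings) / len(ratings)


# Made-up ratings from four internal reviewers for two pieces.
steady_piece = [3, 3, 3, 3]    # everyone thinks it's fine
standout_piece = [2, 2, 3, 5]  # one reviewer loved it

print(linear_average(steady_piece), linear_average(standout_piece))            # 3.0 3.0
print(exponential_average(steady_piece), exponential_average(standout_piece))  # 4.0 6.0
```

On the linear scale the two pieces look identical. On the exponential scale the piece that one reviewer loved pulls ahead, which is the kind of outlier you might want to notice.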

What else?

Ok, I’ll wrap up here. What am I missing? What frameworks, processes and metrics do you use to measure content quality in your content organization?

(Aside as a closing note: this is all related to branding in the age of content - how we monitor, shape and think about perception and “brand” for companies that produce content at scale… Definitely more to unpack here.)

–

Footnotes:

1. In my research I’ve come to think Google based some of their methodology on the System Usability Scale, originally developed in 1986. More details on the SUS here.


This post was written by Tom Critchlow - blogger and independent consultant.